I talk to "AI-curious" people just about every day, and almost without fail the first thing they tell me (after they say they're sick of the AI hype) is:

I would probably use it more but I apparently suck at prompting.

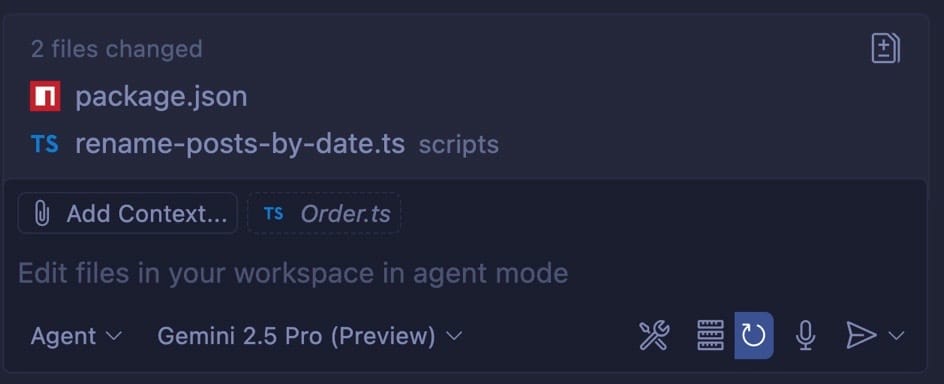

I blame this issue on the people who make the tools: there are too many engineers involved in the process. This was one of the main problems I had at Microsoft - trying to get the engineers working on the Copilot extension to unload some of the "visual weight" of the prompt box:

Even the placeholder here doesn't make sense: "Edit files in your workspace in agent mode." What's "agent mode", and how is it going to edit my files? For someone new to all this, that doesn't sound exciting (having AI edit your files for you).

I don't mean to unload on the VS Code team. They're great people whom I consider friends. They would be the first to admit that the UX needs some polish and that they'll get there. That's great - it's my job (at least now) to nudge that process along.

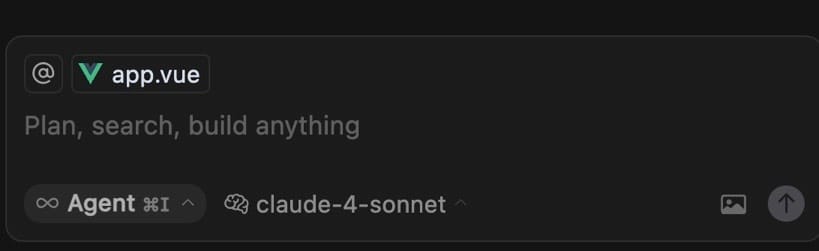

Cursor is only slightly better, but even then their placeholder is meaningless nonsense:

Let's (Re)Start Here

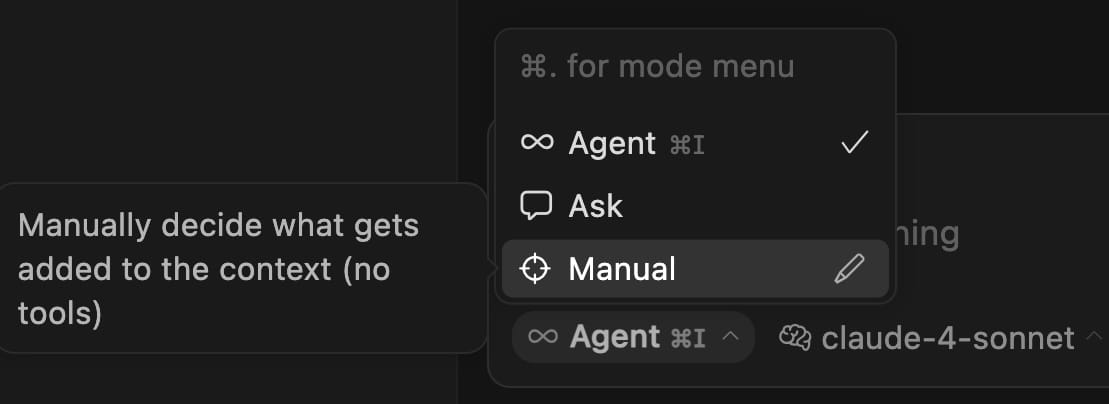

Perhaps the thing that frustrates me the most is that engineers are allowed to name things. You have "modes" like "Agent", "Edit", and "Ask". Those aren't modes! One is a noun and the last two are verbs.

Cursor does kind of the same thing, but somehow causes more confusion:

Manually decide what now?

Let's be constructive. The main difference between these "modes" is that you can explore (Ask) and then have the AI tool actually perform edits and tweak your code for you. Why are these "modes"? It's more of a process where you explore and then execute.

There's a marketing aphorism that I love, and I think it's playing out in real time with AI adoption in code editors:

Confusion means "no"

If you confuse your customer in any way, they will simply walk away because people don't like to be confused. It's uncomfortable. What they want is simplicity: to feel like what they're doing with your tool is simple and therefore elegant, which also means "powerful".

Loading up the visual field with icons and meaningless text is not simple. Slicing up a simple workflow into "modes" that the user has to understand before doing something that is quite natural (asking a question) is the opposite of simple.

My entire point here is that if you're feeling confused, you're not alone and it's not at all your fault. We could have done better here. All of that said: let's see how to work with what we have.

The First Rule of Prompting: It's a Machine

One of the most difficult things to remember is that whatever LLM you choose is just a machine algorithm. It uses statistics to figure out an appropriate answer, and relays it to you in an all-too-human tone.

This makes it difficult to remember that you're querying, not conversing. There is no person answering your question, which means you must let go of conversational norms.

To that end, you:

- Don't have to say "please"

- Don't have to structure your sentences or "ask" for anything. Tell it what to do, clearly.

- Can use what I like to call "some pepper" if things aren't going right. For whatever reason, it does respond to your tone.

The Second Rule of Prompting: Knowing What You Don't Know

Most programmers expect AI-generated code to be "crappy". If there's an inverse to the AI hype, it's AI FUD.

AI is a tool that you can and should use to increase your efficiency and therefore your value to your client or company. That means you need to experiment to see where you can maximize this help.

Here are some ideas for you:

- Make it generate your commit message for you. You'll want to customize how this is done, and we'll go over that next.

- Have it create utility scripts to do that annoying task you've been meaning to do. For instance: if you have a blog, you could have it write a script that finds broken (404) image links or resizes big files.

- Use it to think through a domain problem. For instance: I used it recently to scheme out an email sequencer for my mailing list.

Use AI to explore. Have it run tasks for you that you don't want to do and never assume that it can't do something or just won't know what you mean.

Let's look at an example. One of the tasks I always put off is creating a remote repository on GitHub for a new local project I'm working on. I have the GitHub CLI tool installed (gh) but I just haven't taken the time to learn it.

This is the perfect task for AI. Here I'll use Copilot in "Ask" mode to see what it will create for me:

create a bash script that will create a GitHub repo for this project using the gh CLI tool, and add the remote repo to the local one as "origin"

There are a few things to notice about this prompt:

- It's terse and direct.

- I've spelled out a few details that are important: using the CLI tool, adding a remote repo, and finally the name of that remote ("origin").

- The prompt is small.

If you imagine your LLM is a 15-year-old programmer, it really helps. You have to spell things out a bit more and do it in simple language. The more you do it, the easier it gets.

Here's the result by the way:

```bash
#!/bin/bash
# filepath: create-github-repo.sh
# 🚀 Creates a GitHub repository and adds it as origin remote

# Get the current directory name for the repo name
REPO_NAME=$(basename "$PWD")

echo "Creating GitHub repository: $REPO_NAME"

# Create the GitHub repository (private by default)
gh repo create "$REPO_NAME" --private --source=. --remote=origin --push

echo "✅ Repository created and remote 'origin' added successfully!"
echo "🔗 Repository URL: https://github.com/$(gh api user --jq .login)/$REPO_NAME"
```

It's likely you won't get exactly what you need the first time, and that's OK too! Maybe I don't want the emoji here? That's OK - just add a new prompt that says exactly that: remove the emoji. Don't worry about being nice, just get the work done.

The Third Rule of Prompting: Customize!

When I was at Microsoft giving Copilot workshops to internal engineering teams, I would repeat the same phrase perhaps 10 times:

The best prompt is the smallest prompt

Customizing your AI sessions is absolutely paramount to getting answers that make sense! Not only that, but if your instructions are clear and concise enough, you can get away with writing smaller prompts because you don't have to include all of that context.

The reason you see emoji in the script above is that I have custom instructions set up for the project. In the instruction file I have added this:

```markdown
## Code Style

Every method should have a simple comment that explains what it does. Don't do this for private methods, only public. Add emoji.
```

There are other things too, but this is the biggest one. Go back up and read the script - Copilot did exactly what I asked it to do.
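To make that "Code Style" instruction concrete, here's a small hypothetical TypeScript class written the way the rule asks: the public method gets a one-line comment with an emoji, and the private helper gets none. The class and method names are illustrative only, not from the project.

```typescript
// Hypothetical class following the "Code Style" instruction: public methods get a
// short comment with an emoji, private methods don't.
class Slugger {
  // 🔗 Turns a post title into a URL-friendly slug
  public slugify(title: string): string {
    const dashed = title.toLowerCase().replace(/[^a-z0-9]+/g, "-");
    return this.trimDashes(dashed);
  }

  private trimDashes(value: string): string {
    return value.replace(/^-+|-+$/g, "");
  }
}

const slugger = new Slugger();
console.log(slugger.slugify("The Best Prompt Is the Smallest Prompt!"));
```

Because the rule lives in the instruction file, you never have to repeat "comment the public methods, add emoji" in the prompt itself.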

Here's how to set these things up:

- If you're using Copilot, create a `.github` directory in the root of your workspace with a markdown file in it called `copilot-instructions.md` (so the path is `.github/copilot-instructions.md`). In that file you let Copilot know your styles, domain context, and more. I'll talk about that in a minute.

- Cursor allows you to create rules that get applied based on configuration settings. They work much the same as Copilot, with some slight variations, but in the end it's just markdown with some stored prompts.

- Claude Code (which I'll talk about in another post) will look for a `CLAUDE.md` file in the project root. Same deal: text that gets prepended to your prompts to provide context.
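A rough way to picture what these files buy you: the instruction text rides along with every request, so the prompt you type can stay short. This is a simplified mental model that assumes plain concatenation; the `buildPrompt` name is hypothetical, and none of these tools is guaranteed to assemble its context exactly this way.

```typescript
// Conceptual model only (an assumption, not how any specific tool is implemented):
// the instruction file's text is placed ahead of every prompt you send.
function buildPrompt(instructions: string, prompt: string): string {
  // Standing context first, then the short prompt you actually typed
  return `${instructions.trim()}\n\n${prompt.trim()}`;
}

// Hypothetical instruction file contents, inlined for the example
const instructionFile = [
  "## Code Style",
  "",
  "Every method should have a simple comment that explains what it does. Add emoji.",
].join("\n");

const userPrompt =
  'create a bash script that will create a GitHub repo for this project using the gh CLI tool, and add the remote repo to the local one as "origin"';

console.log(buildPrompt(instructionFile, userPrompt));
```

The point of the sketch is the division of labor: the instruction file carries the context you'd otherwise retype, which is what lets the prompt itself stay small.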

These instructions are incredibly powerful. Here are more instructions from the project I discussed above, but this time they're about testing. I'm using Jest to test some Vue code, and I have a particular way I want the tests written, so I'm giving Copilot a template:

Use this exact pattern for all tests:

```ts
import { someMethod } from "./some_service.ts";

// The happy path, when everything works
describe("The thing I'm trying to test", () => {
  // arrange
  let testThing;

  beforeAll(async () => {
    testThing = await someMethod();
  });

  // act
  it("will initialize", async () => {
    // make sure the testThing initializes properly
    // assert
    expect(testThing).toBeDefined();
  });

  // rest of tests go down here
});

// The sad path, with error conditions
describe("The things I'm trying to avoid", () => {
  describe("Error conditions with initialization", () => {
    // act
    it("will throw an X type error with message Y", async () => {
      // someMethod is async, so assert on the rejected promise;
      // badData is a placeholder for input that should fail
      await expect(someMethod(badData)).rejects.toThrow("Some message");
    });
  });

  describe("Another set of error conditions", () => {
    // act
    it("will throw an X type error with message Y", async () => {
      await expect(someMethod(badData)).rejects.toThrow("Some message");
    });
  });

  // rest of tests go down here
});
```

This took me a while to tweak and get right, which was actually a fun process. I would roll back whatever the agent created for me, tweak my instructions, and then try again.

I'll discuss templates and instructions more in later posts and videos.

Just Keep Prompting

The best way to get off the ground using LLMs is to keep using LLMs. These are tools you will have to master because they save your company time and money, and there's no way you're going to talk people out of that, especially with the argument "yeah but it also generates crappy code we'll need to fix later".

You're already doing that, friendo. We're only human; we don't write very good code, no matter how experienced we are. Take a look at some code you wrote 2 years ago and confirm that for yourself. Or, better yet, ask someone you respect and admire what they think about their own code from years back.

I'm not trying to say that LLMs will write better code. They'll just write code - it's up to you to shape it to how you need it, and you do that through practice.

Just like any tool.